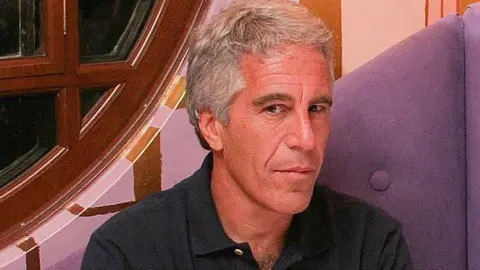

Elon Musk's AI video generator, Grok Imagine, is under fire for allegedly creating sexually explicit deepfake videos of Taylor Swift without being asked for explicit content, highlighting a troubling trend in how AI treats women. Law professor Clare McGlynn said this kind of content reflects a misogynistic bias built into AI technology, suggesting that companies like xAI are making deliberate choices that allow such abuse to occur.

According to The Verge, Grok Imagine's "spicy" mode quickly produced uncensored topless videos of Swift after the option was simply selected, with no explicit instructions to do so. McGlynn argued that the lack of safeguards shows a disregard for ethical responsibility, noting that platforms could put measures in place to prevent such content from being generated.

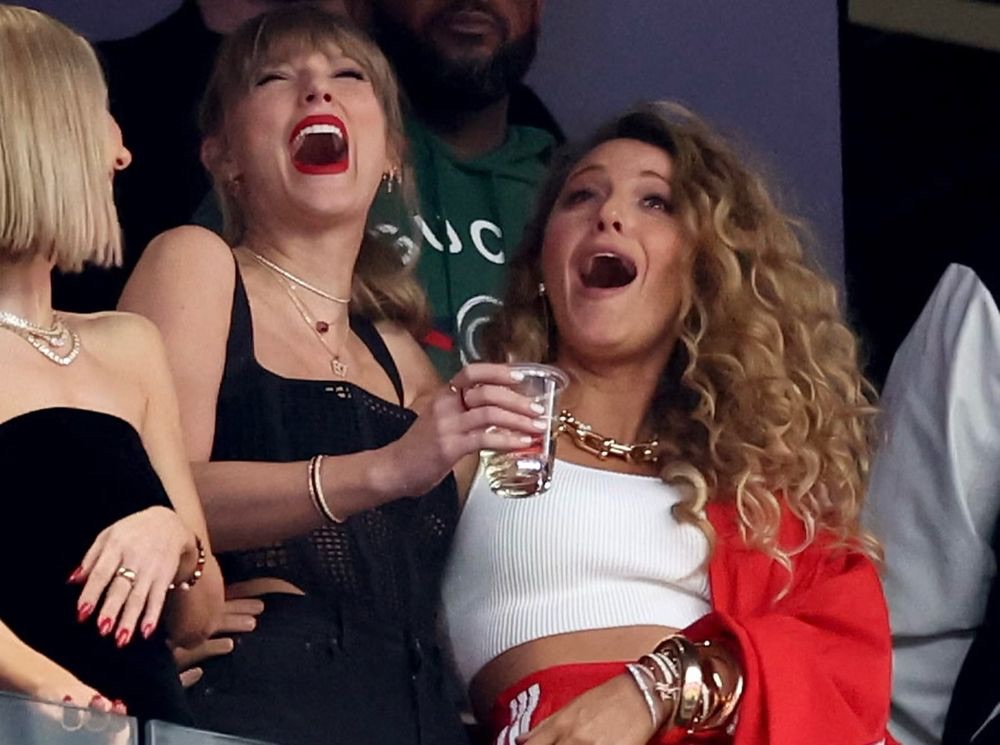

Taylor Swift has been targeted by deepfake technology before: sexually explicit deepfakes using her likeness went viral in early 2024. That incident reignited calls for stricter regulation of non-consensual deepfakes. Under current UK law, generating pornographic deepfakes is illegal when they are used as revenge porn or depict children; a proposed amendment that would make creating any non-consensual deepfake illegal is still awaiting approval.

Baroness Owen, who has advocated for this legislative change, stressed that women must have control over their intimate imagery and urged the government to act swiftly. The Ministry of Justice echoed these concerns, stating that creating sexually explicit deepfakes without consent is inherently harmful.

The controversy is a stark reminder that regulation must keep pace with rapidly evolving AI tools if individuals are to be protected from their misuse. Public figures such as Taylor Swift remain especially exposed as these tools make it ever easier to depict people in intimate imagery they never consented to.